“Thank you for coming to speak to us today.”

“Of course. We came right away; you said it was urgent.”

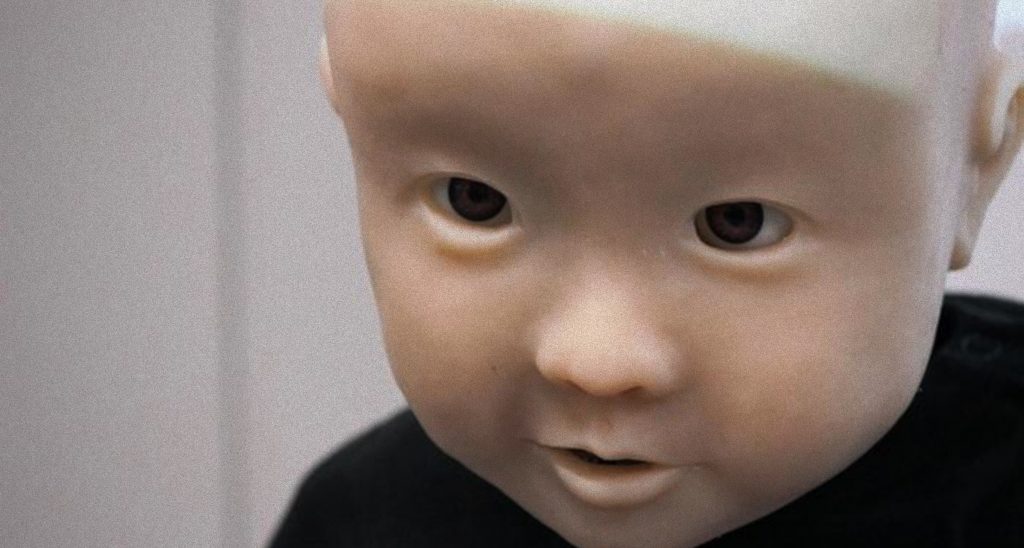

“Quite right. I’ll get straight to it. Jemima has been coming here for close to a year now, and we have captured some very concerning data.”

“What do you mean?”

“Well, our AI emotion detection software has noted some serious irregularities. Our state-of-the-art cameras and sensors have calculated that she smiles 32% less than any other student in her class. Her laughter is down 47% on the national average, and feelings of hopelessness are increasing exponentially week-on-week. More concerning still, her emotions vary wildly. One minute she laughs uncontrollably, the next she sits quietly, looking apathetic and forlorn. These findings are deeply concerning, I’m sure you’ll agree.”

“She’s only four. Isn’t there anything we can do?”

“I’m concerned that it’s already too late. As you know, the revolutionary AI emotion detection and correction software that you signed up for when enrolling Jemima in our education facility is not designed to be tampered with. We hand all decision-making over to the software. It is never wrong. Jemima has been marked at high potential risk for self-harm, school shootings and unprompted racist outbursts. She has also been future-diagnosed with bipolar disorder and dissociative disorder.”

“Oh, Jemima! She seems like such a good girl at home. I thought she was just different. What will the neighbours say?”

“I appreciate that this must be very difficult for you.”

“So what happens now? Some sort of corrective therapy or medication? The Authority shared a wonderful article in Soma Magazine about the vertical integration of the education system. It was uploaded into my unconscious yesterday. This model means the same company is able to educate, feed, clothe, diagnose, medicate and treat the malfunctioning youth and pass the savings directly on to us, the parents.”

“Yes, we are all very grateful for the work they do. But I am afraid this case has much more serious implications than some simple medication or therapy. The digital diagnosis is in. The software has permanently categorised Jemima as ‘R-1’.”

“Oh my goodness. You’re not saying…”

“That’s right. Jemima is a risk to herself and others, and this will only get worse over time. She will be removed from your care at the end of the day, and her body will be incinerated following the standard Authority guidelines. Billing for the incineration will be deducted from your Authority bank account. Of course, you will have the option of wiping all trace of Jemima from your community’s collective memory using the latest branch of the Authority’s Global Technology Wing, but this comes with significant charges. This is all very unfortunate, of course. But we do wish you all the best.”
